A MOF is a metal–organic framework. Chemistry has recently become increasingly computational. NM125-TiO2 is a MOF-metal oxide composite material. Its sensing ability is examined for over 100 human-breath compounds spanning 13 different diseases.

gray = carbon, white = hydrogen, red = oxygen, blue = nitrogen

The black line is the density of states (DOS), the red line is the Gaussian function, and the blue line is the Gaussian-weighted DOS.
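The three curves can be sketched in Python. This is only a minimal illustration: the post does not say how the weighting is done, so the sketch assumes the weighted DOS is the pointwise product of the DOS and an unnormalized Gaussian. The function names, energy grid, and DOS values are made up for illustration and are not results for NM125-TiO2.

```python
import math

def gaussian(energy, center, sigma):
    """Unnormalized Gaussian centered at `center` with width `sigma`."""
    return math.exp(-((energy - center) ** 2) / (2 * sigma ** 2))

def gaussian_weighted_dos(energies, dos, center, sigma):
    """Assumption: weighted DOS = DOS(E) * Gaussian(E), point by point."""
    return [d * gaussian(e, center, sigma) for e, d in zip(energies, dos)]

# Made-up energy grid (eV) and DOS values, for illustration only.
energies = [-2.0, -1.0, 0.0, 1.0, 2.0]
dos = [0.5, 1.2, 0.8, 1.5, 0.3]

weighted = gaussian_weighted_dos(energies, dos, center=0.0, sigma=1.0)
for e, d, w in zip(energies, dos, weighted):
    print(f"E = {e:+.1f}  DOS = {d:.2f}  weighted = {w:.3f}")
```

With this choice the weighted curve equals the DOS at the Gaussian center and is suppressed away from it.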

The DOS of the NM125-TiO2 structure is calculated.

P^2+Σ2P

Blog about infinite pattern

Tuesday, August 26, 2025

2DSQW

This is like prime numbers. 2DSQW is a 2D semiconductor quantum well nanoreceiver.

Moreover, MPA is a terahertz (THz) modular phased array transmitter. Each rectangle has a single quantum processor. This is noise-resilient communication with wireless interconnects. To keep up with Moore's law, the design of wireless interconnects at terahertz (THz) frequencies is required. Conventional wired interconnects suffer from bandwidth limitations and inefficiency.

This modular architecture focuses the beam by generating radiation-concentrated 3D blobs. E_{n,m} is the electric-field vector of element (n, m), and r is the position vector. Together they characterize the beam-focusing pattern generated by the MPA-based transmitter on the receiver plane. The Floquet engineering-based receiver requires accurate modeling of the polarization direction at the receiver. The beam-focusing pattern is calculated by summing the total electric field. These wireless interconnects offer a flexible alternative.
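Summing the field of every element can be sketched in Python. This is a simplified illustration, not the model from the original work: it assumes scalar spherical waves (so the polarization direction is ignored), a square array in the z = 0 plane, and each element pre-phased so that all contributions arrive in phase at the focus. The array size, pitch, frequency, and focus position are hypothetical values.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in m/s

def element_positions(n_side, pitch):
    """Positions of an n_side x n_side array centered at the origin, z = 0."""
    offset = (n_side - 1) / 2
    return [((n - offset) * pitch, (m - offset) * pitch, 0.0)
            for n in range(n_side) for m in range(n_side)]

def total_field(r, elements, focus, freq):
    """Sum the scalar fields of all elements at position r."""
    k = 2 * math.pi * freq / C  # wavenumber
    total = 0j
    for p in elements:
        d_r = math.dist(p, r)          # element to observation point
        d_focus = math.dist(p, focus)  # element to focus
        # Pre-phasing by +k*d_focus cancels the propagation phase at the focus.
        total += cmath.exp(-1j * k * (d_r - d_focus)) / d_r
    return total

# Hypothetical setup: 8 x 8 elements, 0.5 mm pitch, 300 GHz, focus 10 mm away.
elements = element_positions(n_side=8, pitch=0.5e-3)
focus = (0.0, 0.0, 10e-3)
off_focus = (3e-3, 0.0, 10e-3)  # 3 mm to the side on the receiver plane

at_focus = abs(total_field(focus, elements, focus, 300e9))
at_side = abs(total_field(off_focus, elements, focus, 300e9))
print(at_focus, at_side)
```

At the focus every term adds in phase, so the magnitude is the plain sum of the 1/distance amplitudes; away from the focus the phases disagree and the sum is smaller.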

Tuesday, July 8, 2025

Montgomery's pair correlation conjecture

Hybrid orbitals explain molecular geometry and atomic bonding.

This is Montgomery's pair correlation conjecture, which describes the pair correlation between zeros of the Riemann zeta function.

γ and γ' are the imaginary parts of two zeros. This is like piling up prime numbers.
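The density that the conjecture predicts for the normalized spacing u between two zeros is 1 - (sin(πu)/(πu))^2. A small Python sketch of that function follows; the function name is my own choice for illustration, and the code only evaluates the conjectured formula, it does not compute any zeros.

```python
import math

def pair_correlation_density(u):
    """Conjectured pair correlation density 1 - (sin(pi*u) / (pi*u))**2."""
    if u == 0:
        return 0.0  # limit as u -> 0: the sinc term tends to 1
    s = math.sin(math.pi * u) / (math.pi * u)
    return 1.0 - s * s

for u in (0.0, 0.25, 0.5, 1.0, 2.0):
    print(u, pair_correlation_density(u))
```

The value is 0 at u = 0 and approaches 1 for large u, so zeros that are very close together are rare.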

Methane and hydrogen are gases. C=C is carbon bonding, which lies in the surface. Gases move like ↑ ↑ ↓. This is called a π bond.

Monday, July 7, 2025

Hybrid orbital

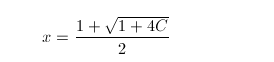

P^2+Σ2P is the pattern of prime numbers, which move like atoms.

This is carbon. It has 6 electrons.

Σ is the hybrid: the atomic orbitals are mixed. Then you get sp^3.

You move one electron from 2S to 2P. This is called promotion. 2S loses one electron. There are 4 hydrogen atoms. The shape is a tetrahedron, and the hybridisation is sp^3. Methane is CH4.

In sp^2 hybridisation, one electron also moves from 2S to 2P. The shape is a triangle, and the hybridisation is sp^2. Ethylene is C2H4, which has a double bond. C=C is connected tightly.
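The promotion step can be sketched in Python as simple electron bookkeeping. This is a minimal illustration, assuming carbon's ground-state configuration 1s2 2s2 2p2 and Hund's rule (each orbital in a subshell is filled singly before any pairing). The dictionary layout and function names are my own.

```python
# Number of orbitals in each subshell (each orbital holds up to 2 electrons).
ORBITALS = {"1s": 1, "2s": 1, "2p": 3}

def promote(config):
    """Move one electron from 2s to 2p (promotion)."""
    promoted = dict(config)
    promoted["2s"] -= 1
    promoted["2p"] += 1
    return promoted

def unpaired(config):
    """Count unpaired electrons, filling orbitals singly first (Hund's rule)."""
    total = 0
    for subshell, electrons in config.items():
        orbitals = ORBITALS[subshell]
        total += electrons if electrons <= orbitals else 2 * orbitals - electrons
    return total

carbon = {"1s": 2, "2s": 2, "2p": 2}  # 6 electrons in total
excited = promote(carbon)

print(carbon, unpaired(carbon))    # 2 unpaired electrons before promotion
print(excited, unpaired(excited))  # 4 unpaired electrons after promotion
```

After promotion there are four unpaired electrons, which matches the four C-H bonds of methane.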

Sunday, February 23, 2025

Sunday, December 29, 2024

Knots

We are all living things. This is the big picture. The boundary of being disappears at Zero, which is called Knotty Problems. Topology is about mechanical deformations, although we don't understand it completely. We are in a black hole, and AI may be enlightening, but we still don't know. We have different languages and cultures. The fusion is not easy.

qi = ±1

±1 is two colors.

N is the crossing number.

This is almost Zero, but the line is expanding. Wr/N is the average writhe.
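The quantities above can be sketched in Python, using the standard definition that the writhe Wr of a knot diagram is the sum of its crossing signs qi. The function names and the two sign lists are illustrative inputs of my own, not data from a specific knot table.

```python
def writhe(signs):
    """Writhe Wr = sum of the crossing signs q_i, each +1 or -1."""
    if any(q not in (1, -1) for q in signs):
        raise ValueError("each crossing sign must be +1 or -1")
    return sum(signs)

def average_writhe(signs):
    """Average writhe Wr / N, where N is the number of crossings."""
    return writhe(signs) / len(signs)

all_positive = [1, 1, 1]   # a diagram with three positive crossings
balanced = [1, 1, -1, -1]  # a diagram whose signs cancel

print(writhe(all_positive), average_writhe(all_positive))
print(writhe(balanced), average_writhe(balanced))
```

When the two colors balance, Wr/N is zero; when every crossing has the same sign, Wr/N is ±1.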

Friday, December 27, 2024

Tuple

This is how AI thinks.

a1, a2, ..., an

This is an n-tuple.

Two n-tuples (a1, a2, ..., an) and (b1, b2, ..., bn) are equal when (a1 = b1) ∧ (a2 = b2) ∧ ... ∧ (an = bn). The set of all tuples whose elements are taken from given sets is called the Cartesian product.

∴

a ∈ A, b ∈ B ⇒ (a, b) ∈ A × B

This is an ordered pair. I use Python.

Moreover, this must be expansion.
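Tuples, ordered pairs, and the Cartesian product can be tried directly in Python. This is a minimal sketch using the built-in `tuple` type and `itertools.product` from the standard library; the sample values in `A` and `B` are arbitrary.

```python
from itertools import product

# Two n-tuples are equal when every pair of components is equal.
a = (1, 2, 3)
b = (1, 2, 3)
componentwise = len(a) == len(b) and all(x == y for x, y in zip(a, b))
print(componentwise, a == b)  # Python's == on tuples does the same check

# Order matters in an ordered pair.
print((1, 2) == (2, 1))

# Cartesian product A x B: every ordered pair (a, b) with a in A and b in B.
A = [1, 2]
B = ["x", "y"]
pairs = list(product(A, B))
print(pairs)
```

Lists are used for `A` and `B` so the pairs come out in a fixed order; with sets the same pairs appear, but the order is not guaranteed.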
